New Netgear Switch, OPNsense and VLANs (Day 2)

2025-01-11

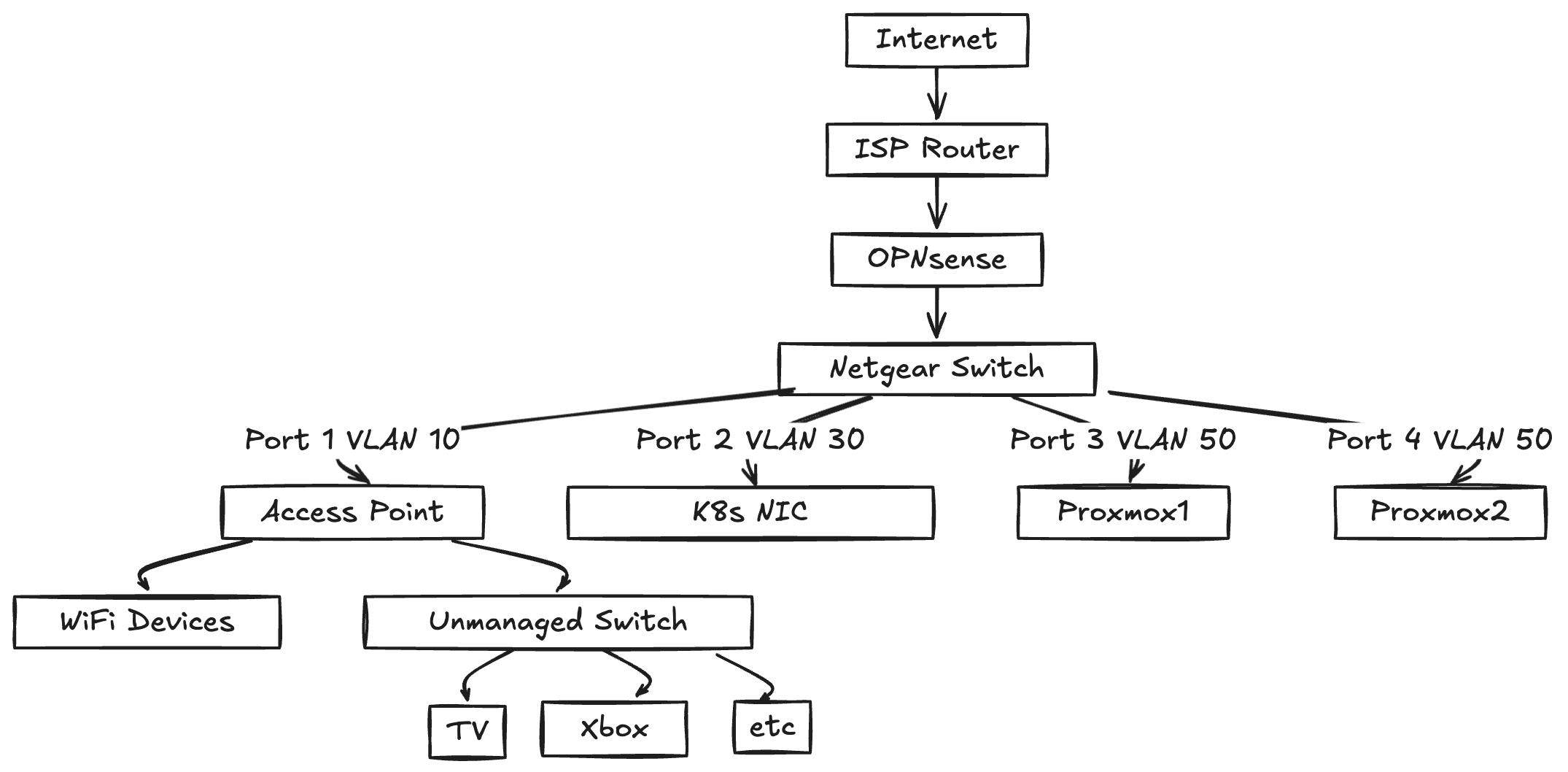

I got a new Netgear managed switch yesterday, so today was all about network segmentation and fighting with OPNsense. I also learned a few things about Proxmox clustering that I didn’t expect to deal with.

First up, I reinstalled OPNsense on the N100 box to get a fresh start for the VLAN setup. The interesting bit was that OPNsense runs as a VM on one of my Proxmox nodes, yet it also has to handle traffic for Proxmox’s own management interface. It’s not quite a chicken-and-egg problem, since they’re on different NICs, but it took some time to get right.

VLAN setup:

VLAN 10: Home Network (192.168.10.0/24)

- All regular home devices (e.g. Xbox, laptops, phones)

- Another unmanaged switch connects here for more devices

VLAN 50: Core Services/Proxmox (192.168.50.0/24)

- Proxmox management IPs

- AdGuard Home

- Traefik

- Any other "always on" services

VLAN 30: Kubernetes (192.168.30.0/24)

- K8s control plane

- K8s workers

- K8s services

- etc
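To sanity-check a plan like this, here is a minimal Python sketch using the standard `ipaddress` module. It encodes the three VLANs from the list above, maps an address back to its VLAN, and asserts that the subnets don’t overlap and that each third octet matches its VLAN ID (true for all three here). The `.1` gateway in `gateway_for` is a hypothetical convention; the post doesn’t say which address OPNsense uses on each VLAN.

```python
import ipaddress
from typing import Optional

# VLAN ID -> (name, subnet), copied from the VLAN plan above.
VLANS = {
    10: ("Home Network", ipaddress.ip_network("192.168.10.0/24")),
    50: ("Core Services/Proxmox", ipaddress.ip_network("192.168.50.0/24")),
    30: ("Kubernetes", ipaddress.ip_network("192.168.30.0/24")),
}


def vlan_for(address: str) -> Optional[int]:
    """Return the VLAN ID whose subnet contains `address`, or None if none does."""
    ip = ipaddress.ip_address(address)
    for vlan_id, (_name, subnet) in VLANS.items():
        if ip in subnet:
            return vlan_id
    return None


def gateway_for(vlan_id: int) -> ipaddress.IPv4Address:
    """HYPOTHETICAL convention: OPNsense takes the .1 address on each VLAN."""
    _name, subnet = VLANS[vlan_id]
    return subnet.network_address + 1


def check_plan() -> None:
    """Assert the subnets don't overlap and each third octet equals its VLAN ID."""
    nets = [(vid, subnet) for vid, (_name, subnet) in VLANS.items()]
    for i, (vid_a, a) in enumerate(nets):
        # `packed` is the 4-byte form of the address; index 2 is the third octet
        assert a.network_address.packed[2] == vid_a, f"VLAN {vid_a} uses {a}"
        for _vid_b, b in nets[i + 1:]:
            assert not a.overlaps(b), f"{a} overlaps {b}"


if __name__ == "__main__":
    check_plan()
    for vlan_id, (name, subnet) in sorted(VLANS.items()):
        print(f"VLAN {vlan_id:>2} {name:<22} {subnet} gw {gateway_for(vlan_id)}")
    print(vlan_for("192.168.30.42"))  # -> 30
```

Matching the third octet to the VLAN ID isn’t required by anything, but it makes it easy to tell at a glance which segment an address lives on.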

And then I stumbled across an issue with my Proxmox cluster.

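When one node was offline, the other wouldn’t start its VMs because of quorum requirements. With two nodes holding one vote each, a lone node only has half the votes rather than the majority the cluster needs, so it isn’t quorate. Not ideal for a homelab where I might want to take nodes down frequently, and where the OPNsense VM needs to be available as soon as its node starts.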
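The arithmetic behind this is a simple majority rule. Below is a simplified Python model of it (not Proxmox or corosync code), assuming the default of one vote per node and ignoring the rest of corosync’s vote-counting logic:

```python
def quorum_threshold(expected_votes: int) -> int:
    """Votes needed for quorum: a strict majority, floor(expected / 2) + 1."""
    return expected_votes // 2 + 1


def is_quorate(votes_present: int, expected_votes: int) -> bool:
    """True if the votes currently present reach the quorum threshold."""
    return votes_present >= quorum_threshold(expected_votes)


if __name__ == "__main__":
    expected = 2  # two nodes, one vote each (assumed default)
    for present in (2, 1):
        state = "quorate" if is_quorate(present, expected) else "NOT quorate"
        print(f"{present}/{expected} votes present, need {quorum_threshold(expected)}: {state}")
```

With both nodes up, 2 of 2 votes meets the threshold of 2; with one node down, 1 vote falls short, which is exactly the state where the surviving node refuses to start guests.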

After some googling, I found posts suggesting workarounds like running OPNsense on a different node, none of which were really an option for me.

So my solution was a simple systemd service that sets the cluster’s expected votes to 1 on boot:

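```ini
[Unit]
Description=Set Proxmox quorum expectations
# Only run once the cluster stack (corosync and pmxcfs) is up
After=corosync.service pve-cluster.service
Requires=corosync.service pve-cluster.service

[Service]
# Run a single command at boot; the unit stays "active" after it exits
Type=oneshot
RemainAfterExit=yes
# Give corosync a few seconds to settle before changing the vote count
ExecStartPre=/bin/sleep 10
# Expect only one vote, so a lone node can reach quorum on its own
ExecStart=/usr/bin/pvecm expected 1

[Install]
WantedBy=multi-user.target
```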

This lets me boot a single node without the cluster throwing a fit about quorum. It might not be production-grade, but it’s perfect for a homelab where I just need the main services available as soon as possible.
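Here is the same majority arithmetic applied after the service has run, as a simplified Python model (not Proxmox code). It assumes one vote per node, uses the hypothetical node names `pve1` and `pve2`, and ignores anything corosync does once the nodes can see each other again. It shows why a lone node becomes quorate, and also why this is homelab-grade: if both nodes boot while unable to reach each other, each one independently considers itself quorate.

```python
from dataclasses import dataclass


def quorum_threshold(expected_votes: int) -> int:
    """Votes needed for quorum: a strict majority of expected_votes."""
    return expected_votes // 2 + 1


@dataclass
class Node:
    name: str            # hypothetical node name
    expected_votes: int  # how many votes this node expects the cluster to have
    visible_votes: int   # votes it can see: its own plus any reachable peers

    def quorate(self) -> bool:
        return self.visible_votes >= quorum_threshold(self.expected_votes)


if __name__ == "__main__":
    # Lone node with the default expectation of 2 votes: blocked.
    print(Node("pve1", expected_votes=2, visible_votes=1).quorate())  # False
    # Same node after `pvecm expected 1` has run: quorate on its own.
    print(Node("pve1", expected_votes=1, visible_votes=1).quorate())  # True
    # Both nodes up but partitioned, both having run the service:
    # each side is quorate independently, which quorum normally exists to prevent.
    sides = [Node("pve1", 1, 1), Node("pve2", 1, 1)]
    print([n.quorate() for n in sides])  # [True, True]
```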