Buildlog genesis - Single control-plane Kubernetes homelab

2024-11-06

So I decided to build a Kubernetes cluster at home. Sure, I could say it’s mainly for self-hosting and the workloads I want to experiment with (probably ML too, which means adding a GPU node), but honestly, I’ve always wanted a homelab to tinker with. Having a proper test environment to break things in beats trying stuff out in production, and it’s plenty satisfying.

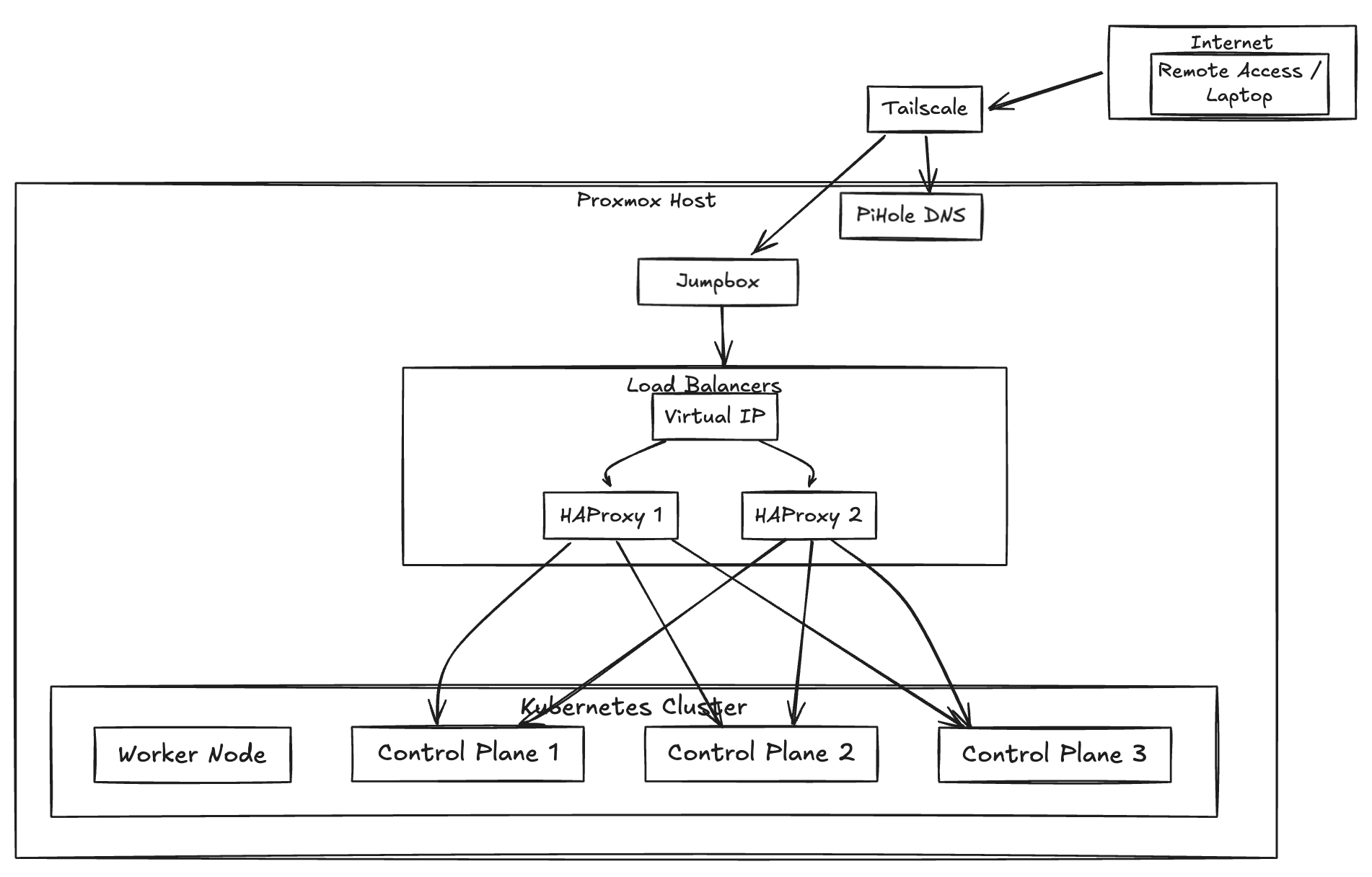

The plan

- 1 control plane node (future HA, but let’s not get ahead of ourselves)

- 3 worker nodes (because why not)

- LoadBalancer IP address management (MetalLB)

- Traefik as the ingress controller (later)

- A way to manage it all (enter Terraform/Tofu and Ansible)

The Build

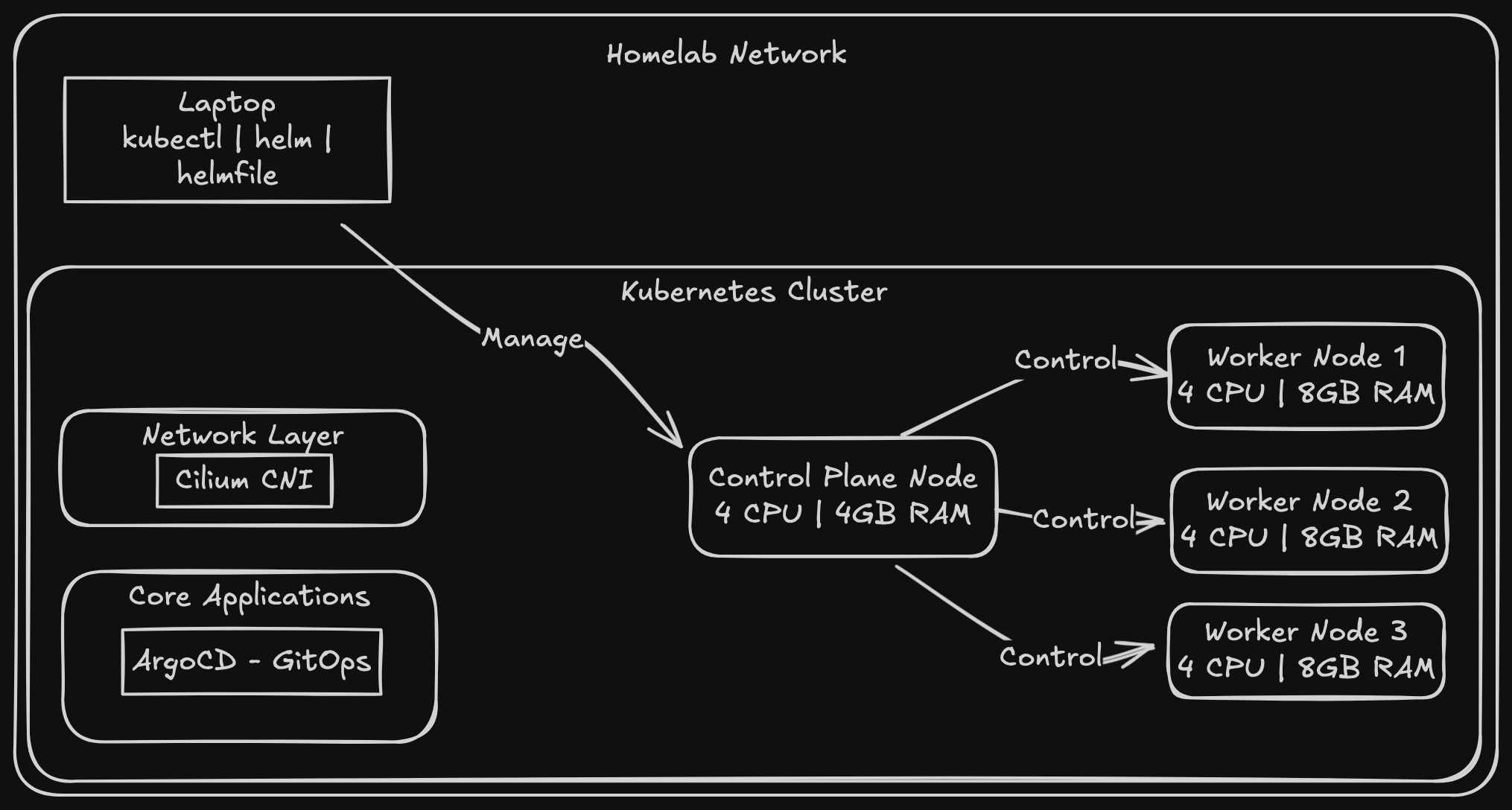

VM specifications

These are just what I chose for now; I’ll adjust them as needed.

The control plane has:

- num_cpus = 4

- memory = 4GB

- disk = 50GB

The worker nodes have:

- num_cpus = 4

- memory = 8GB

- disk = 100GB
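Summing those up gives the capacity the host has to provide for one control plane plus three workers. This is a quick back-of-the-envelope sketch that assumes nothing is overcommitted and the disks are fully allocated rather than thin-provisioned:

```bash
# Per-VM sizes from the lists above (memory and disk in GB).
CP_CPUS=4;     CP_MEM=4;     CP_DISK=50
WORKER_CPUS=4; WORKER_MEM=8; WORKER_DISK=100
WORKERS=3

# Totals = one control plane + WORKERS workers.
total_cpus=$((CP_CPUS + WORKERS * WORKER_CPUS))
total_mem=$((CP_MEM + WORKERS * WORKER_MEM))
total_disk=$((CP_DISK + WORKERS * WORKER_DISK))

printf 'vCPUs: %d, memory: %dGB, disk: %dGB\n' "$total_cpus" "$total_mem" "$total_disk"
# prints: vCPUs: 16, memory: 28GB, disk: 350GB
```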

Infrastructure Time

I use OpenTofu (Terraform works too) with a custom provider that runs a bunch of CLI commands to provision the instances.

In tofu land:

```hcl
module "cp_nodes" {
  source = "./modules"

  cpus   = var.cp_compute_properties.cp_num_cpus
  memory = var.cp_compute_properties.cp_memory
  disk   = var.cp_compute_properties.cp_disk

  instance_addresses = var.control_plane_instance.cp_ip_addresses
  instance_nodes     = var.control_plane_instance.cp_instance

  cloudinit_file = "${path.module}/setup/user_data.cfg"
  hosts          = "${path.module}/setup/hosts"
  setup_script   = "${path.module}/setup/setup.sh"
  image          = var.ubuntu_image
}

module "worker_nodes" {
  source = "./modules"

  cpus   = var.worker_compute_properties.worker_num_cpus
  memory = var.worker_compute_properties.worker_memory
  disk   = var.worker_compute_properties.worker_disk

  instance_addresses = var.worker_nodes_instance.worker_ip_addresses
  instance_nodes     = var.worker_nodes_instance.worker_instance

  cloudinit_file = "${path.module}/setup/user_data.cfg"
  hosts          = "${path.module}/setup/hosts"
  setup_script   = "${path.module}/setup/setup.sh"
  image          = var.ubuntu_image
}
```
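The contents of `setup/hosts` aren't shown here. Assuming it's an /etc/hosts-style fragment that lets the nodes resolve each other by name (the bootstrap script later looks up `lb` with `getent hosts`), here is a minimal sketch that renders one from name=IP pairs. The `render_hosts` function is hypothetical, and the addresses are the ones that show up in the `kubectl get nodes -o wide` output further down. There's no `lb` line because its address isn't part of this write-up.

```bash
# Hypothetical helper: turn "name=ip" arguments into /etc/hosts-style lines.
render_hosts() {
  for pair in "$@"; do
    name=${pair%%=*}   # everything before the first '='
    ip=${pair#*=}      # everything after the first '='
    printf '%s %s\n' "$ip" "$name"
  done
}

# Node addresses as reported by `kubectl get nodes -o wide` later in this post.
render_hosts \
  controlplane01=192.168.50.100 \
  node01=192.168.50.97 \
  node02=192.168.50.98 \
  node03=192.168.50.99
```

Redirecting that output into the `setup/hosts` file keeps the node list in one place, but how the custom provider consumes the file is not covered here.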

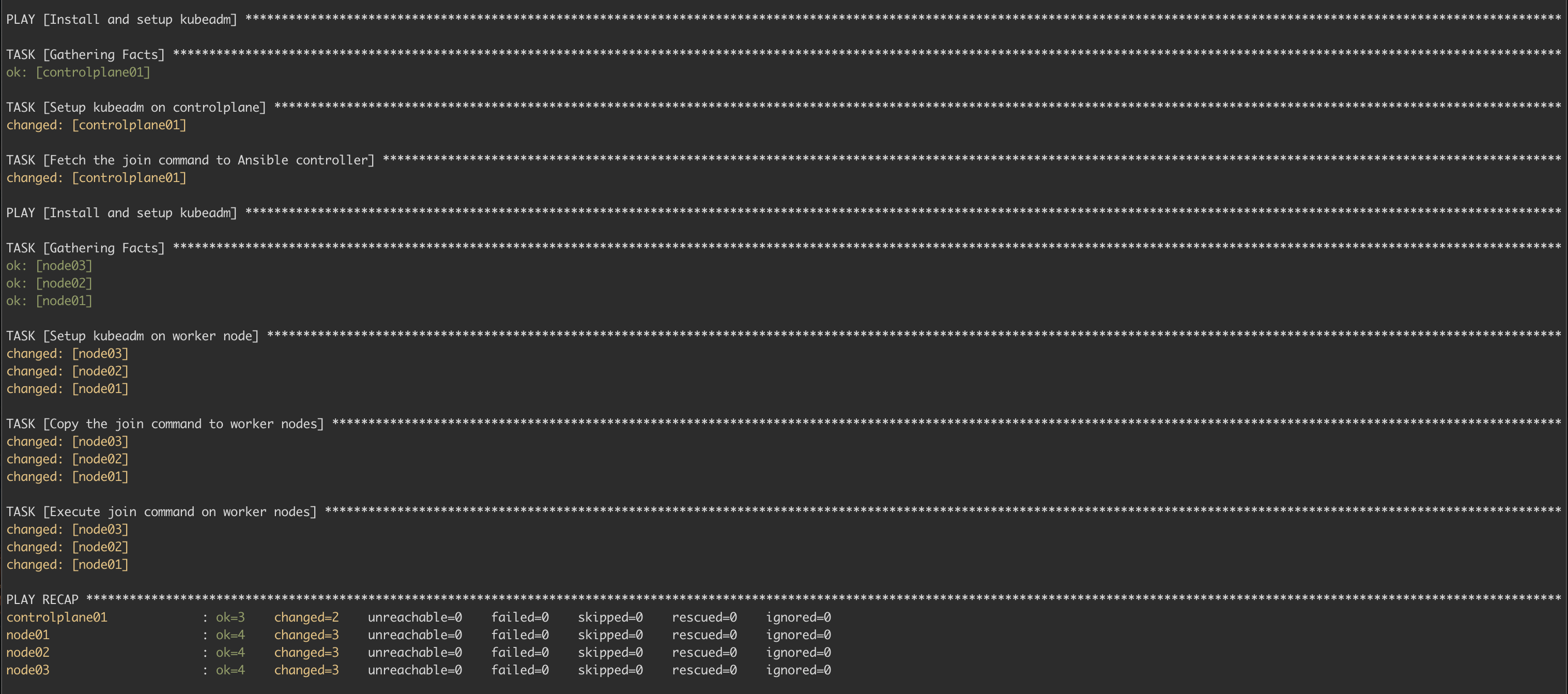

Kubernetes Bootstrap - Enter Ansible

First sign of life - nodes responding (always start with a ping test):

```console
➜ ~ ansible all -m ping -i inventory.ini
node02 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
controlplane01 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
node03 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
node01 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
```

After setting it up by hand (running into issues and eventually figuring them out; I’ll probably write that up one day) and tearing it down a couple of times, the next logical step was to have Ansible set it all up for me.

My inventory:

```ini
[controlplanes]
controlplane01

[nodes]
node01
node02
node03
```

and the playbook:

```yaml
- name: Install and set up kubeadm on the control plane
  hosts: controlplanes
  tasks:
    - name: Set up kubeadm on the control plane
      ansible.builtin.script: kubeadm.sh -controlplane
      register: results

    # - debug:
    #     var: results.stdout

    - name: Fetch the join command to the Ansible controller
      ansible.builtin.fetch:
        src: /tmp/kubeadm_join_cmd.sh
        dest: "{{ playbook_dir }}/files/kubeadm_join_cmd.sh"
        flat: true

- name: Install kubeadm and join the worker nodes
  hosts: nodes
  tasks:
    - name: Set up kubeadm on the worker node
      ansible.builtin.script: kubeadm.sh
      register: results

    # - debug:
    #     var: results.stdout

    - name: Copy the join command to the worker nodes
      ansible.builtin.copy:
        src: "{{ playbook_dir }}/files/kubeadm_join_cmd.sh"
        dest: /tmp/kubeadm_join_cmd.sh
        mode: '0755'

    - name: Execute the join command on the worker nodes
      become: true   # run as root instead of calling sudo inside the command
      ansible.builtin.command:
        cmd: sh /tmp/kubeadm_join_cmd.sh
        creates: /etc/kubernetes/kubelet.conf   # skip nodes that have already joined
```
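The fetch/copy hand-off trusts that /tmp/kubeadm_join_cmd.sh actually holds a join command. Here is a cheap guard, sketched as a hypothetical shell function (`check_join_cmd` is not part of the original setup). It could run at the end of kubeadm.sh on the control plane, or against the fetched copy before it's used, and it fails loudly if the file is empty or is missing the pieces a worker join needs:

```bash
# Hypothetical guard: return non-zero unless the file looks like a kubeadm
# worker join command (non-empty, with a token and a CA cert hash).
check_join_cmd() {
  f=$1
  [ -s "$f" ] || { echo "missing or empty: $f" >&2; return 1; }
  grep -q 'kubeadm join' "$f" || { echo "no 'kubeadm join' in $f" >&2; return 1; }
  grep -q -- '--token' "$f" || { echo "no --token in $f" >&2; return 1; }
  grep -q -- '--discovery-token-ca-cert-hash' "$f" || { echo "no CA cert hash in $f" >&2; return 1; }
}

# Usage, e.g. right after the join command is written:
# check_join_cmd /tmp/kubeadm_join_cmd.sh || exit 1
```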

and the shell script, kubeadm.sh (I’m not particularly good at shell scripting):

```bash
#!/usr/bin/env bash
# Usage: kubeadm.sh [-controlplane]
ARG=$1

# kubelet won't start with swap on (by default); also comment out swap
# entries in fstab so it stays off after a reboot
sudo swapoff -a
sudo sed -i '/\sswap\s/ s/^[^#]/#&/' /etc/fstab

# Load required kernel modules
sudo modprobe overlay
sudo modprobe br_netfilter

# Persist modules between restarts
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

# Set required networking parameters
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system

# Container runtime: containerd with the systemd cgroup driver
sudo apt-get update
sudo apt-get install -y containerd
sudo mkdir -p /etc/containerd
containerd config default | sed 's/SystemdCgroup = false/SystemdCgroup = true/' | sudo tee /etc/containerd/config.toml
sudo systemctl restart containerd

# Latest stable minor version, e.g. v1.31.2 -> v1.31 (the apt repo is per minor version)
KUBE_LATEST=$(curl -L -s https://dl.k8s.io/release/stable.txt | awk 'BEGIN { FS="." } { printf "%s.%s", $1, $2 }')

sudo mkdir -p /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/${KUBE_LATEST}/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg --yes
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/${KUBE_LATEST}/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl

# Point crictl at containerd
sudo crictl config \
  --set runtime-endpoint=unix:///run/containerd/containerd.sock \
  --set image-endpoint=unix:///run/containerd/containerd.sock

# PRIMARY_IP isn't set in this script and is expected from the environment;
# fallback (assumption): the first address reported by `hostname -I`
PRIMARY_IP=${PRIMARY_IP:-$(hostname -I | awk '{ print $1 }')}

cat <<EOF | sudo tee /etc/default/kubelet
KUBELET_EXTRA_ARGS='--node-ip ${PRIMARY_IP}'
EOF

if [ "$ARG" = "-controlplane" ]; then
  POD_CIDR=10.244.0.0/16
  SERVICE_CIDR=10.96.0.0/16
  LB_IP=$(getent hosts lb | awk '{ print $1 }')   # not used yet (HA later)
  NODE_HOSTNAME=$(hostname)

  # Initialize only if it's the primary control plane
  if [ "$NODE_HOSTNAME" = "controlplane01" ]; then
    sudo kubeadm init --pod-network-cidr "$POD_CIDR" \
      --service-cidr "$SERVICE_CIDR" --apiserver-advertise-address "$PRIMARY_IP" \
      --apiserver-cert-extra-sans lb > /tmp/kubeadm_init_output.txt

    # Capture the worker join command ("kubeadm join ..." plus its continuation
    # line); the shebang lets the file be executed directly
    { echo '#!/bin/sh'; grep "kubeadm join" -A 1 /tmp/kubeadm_init_output.txt; } > /tmp/kubeadm_join_cmd.sh
    chmod +x /tmp/kubeadm_join_cmd.sh

    mkdir -p "$HOME/.kube"
    sudo cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
    sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
  else
    # For additional control planes later
    echo "TODO: modify the join command to join as a control plane"
  fi
fi
```
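Two bits of text munging in the script are easy to get wrong, so here they are pulled out into hypothetical functions that can be tried without a cluster. The first is the awk that turns stable.txt's `v1.31.2` into the `v1.31` used in the pkgs.k8s.io repository path. The second is the `grep -A 1` that grabs the join command from saved `kubeadm init` output; the options are moved before the pattern here so it also works with non-GNU grep.

```bash
# Same awk as the KUBE_LATEST line: keep only "major.minor" of a version string.
kube_minor() {
  printf '%s\n' "$1" | awk 'BEGIN { FS="." } { printf "%s.%s", $1, $2 }'
}

# Same idea as the join-command capture: every "kubeadm join" line plus the one
# line after it, from a file holding saved `kubeadm init` output.
extract_join_cmd() {
  grep -A 1 'kubeadm join' "$1"
}

kube_minor v1.31.2   # prints v1.31 (no trailing newline)
```

The `-A 1` relies on kubeadm printing the worker join command as exactly two lines. Once there is a `--control-plane-endpoint` (the HA plan), kubeadm also prints a longer control-plane join command (with `--control-plane`, plus `--certificate-key` when certificates are uploaded), so this grep would likely pick up a truncated copy of that one as well.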

Running `ansible-playbook -i inventory.ini playbook.yaml` applies the playbook, which uses the shell script to set up the control plane and the workers.

Then I copied the kubeconfig to my laptop with `scp -i ~/.ssh/labkey ubuntu@controlplane01:~/.kube/config ~/.kube/config`.
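Copying straight onto ~/.kube/config replaces whatever contexts were already there. If the laptop also talks to other clusters, a more cautious option is to keep the homelab config in its own file and list both files in `KUBECONFIG`, which kubectl merges (when two files define the same name, the first file listed wins). Below is a minimal sketch of building that value; `kubeconfig_path` and the `~/.kube/homelab.yaml` file name are made-up examples:

```bash
# Hypothetical helper: join existing kubeconfig files into a KUBECONFIG value
# (colon-separated on Linux and macOS), silently skipping missing files.
kubeconfig_path() {
  result=""
  for f in "$@"; do
    [ -f "$f" ] || continue
    result="${result:+$result:}$f"   # add ':' only between entries
  done
  printf '%s\n' "$result"
}

# Usage (file name is just an example):
# scp -i ~/.ssh/labkey ubuntu@controlplane01:~/.kube/config ~/.kube/homelab.yaml
# export KUBECONFIG="$(kubeconfig_path ~/.kube/config ~/.kube/homelab.yaml)"
# kubectl config get-contexts
```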

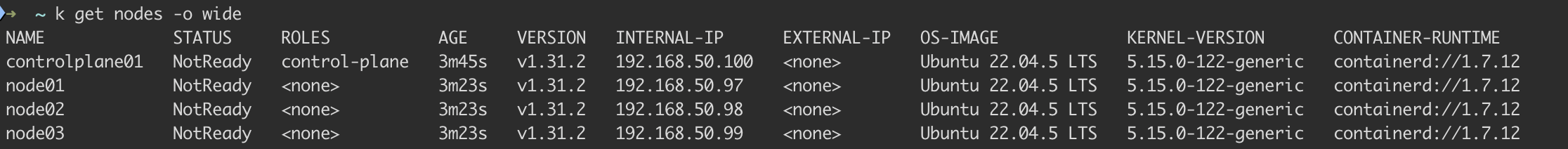

Finally, the moment of truth:

Current progress

- Nodes responding

- Basic connectivity working

- `kubectl get nodes` lists all four nodes (NotReady, but they show up)
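Until a CNI is installed, every node sits in NotReady. Here is a small filter that lists the nodes that aren't Ready yet, handy to re-run while waiting. It's a sketch that assumes the default `kubectl get nodes --no-headers` column layout (NAME first, STATUS second), and the `not_ready_nodes` name is made up:

```bash
# Hypothetical filter: read `kubectl get nodes --no-headers` output on stdin and
# print every node whose STATUS column isn't exactly "Ready". Cordoned nodes
# ("Ready,SchedulingDisabled") are listed too.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage:
# kubectl get nodes --no-headers | not_ready_nodes
```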

The nodes needed a CNI plugin before they could go Ready. While setting that up, I figured I might as well install MetalLB and Argo CD too.

I chose Cilium after seeing it at KubeCon Paris this year; after trying it out, I was impressed enough that it became my go-to CNI.

Instead of using kubectl apply (so 2020! Just picking a random year in the past), I went for a more everything-as-code approach with Helmfile, a declarative spec for deploying Helm charts.

The process is straightforward:

- create a `helmfile.yaml` and add your charts

- run `helmfile apply` and wait for the magic to happen.

The helmfile contents:

```yaml
repositories:
  - name: argo
    url: https://argoproj.github.io/argo-helm
  - name: cilium
    url: https://helm.cilium.io/
  - name: metallb
    url: https://metallb.github.io/metallb

releases:
  - name: argocd
    namespace: argocd
    chart: argo/argo-cd
    version: 7.7.0
    values:
      - server:
          extraArgs:
            - --insecure
  - name: cilium
    namespace: kube-system
    chart: cilium/cilium
    version: 1.16.3
  - name: metallb
    namespace: metallb-system
    chart: metallb/metallb
    version: 0.14.8
```

A few seconds to a minute later and… she’s alive!

```console
k get nodes -o wide
NAME             STATUS   ROLES           AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION       CONTAINER-RUNTIME
controlplane01   Ready    control-plane   11m   v1.31.2   192.168.50.100   <none>        Ubuntu 22.04.5 LTS   5.15.0-122-generic   containerd://1.7.12
node01           Ready    <none>          10m   v1.31.2   192.168.50.97    <none>        Ubuntu 22.04.5 LTS   5.15.0-122-generic   containerd://1.7.12
node02           Ready    <none>          10m   v1.31.2   192.168.50.98    <none>        Ubuntu 22.04.5 LTS   5.15.0-122-generic   containerd://1.7.12
node03           Ready    <none>          10m   v1.31.2   192.168.50.99    <none>        Ubuntu 22.04.5 LTS   5.15.0-122-generic   containerd://1.7.12
```

and with the CNI in place, the container creation problem is gone:

```console
➜ ~ k get po -A
NAMESPACE        NAME                                                READY   STATUS    RESTARTS   AGE
argocd           argocd-application-controller-0                     1/1     Running   0          2m33s
argocd           argocd-applicationset-controller-858cddf5cb-pfffm   1/1     Running   0          2m34s
argocd           argocd-dex-server-f7794994f-8r5sg                   1/1     Running   0          2m34s
argocd           argocd-notifications-controller-d988b477c-95g5m     1/1     Running   0          2m34s
argocd           argocd-redis-5bd4bbb-5jkg5                          1/1     Running   0          2m34s
argocd           argocd-repo-server-6d9f6bd866-4kkcv                 1/1     Running   0          2m34s
argocd           argocd-server-7b6f64dd8d-d7rlv                      1/1     Running   0          2m34s
kube-system      cilium-82nxh                                        1/1     Running   0          3m25s
kube-system      cilium-82qdp                                        1/1     Running   0          3m25s
kube-system      cilium-8hnqm                                        1/1     Running   0          3m25s
kube-system      cilium-8l9g5                                        1/1     Running   0          3m25s
kube-system      cilium-envoy-gppg7                                  1/1     Running   0          3m25s
kube-system      cilium-envoy-qdpwc                                  1/1     Running   0          3m25s
kube-system      cilium-envoy-s4js7                                  1/1     Running   0          3m25s
kube-system      cilium-envoy-vbhnv                                  1/1     Running   0          3m25s
kube-system      cilium-operator-b4bfbfd9c-6cr4q                     1/1     Running   0          3m25s
kube-system      cilium-operator-b4bfbfd9c-mvhnd                     1/1     Running   0          3m25s
kube-system      coredns-7c65d6cfc9-4pt49                            1/1     Running   0          11m
kube-system      coredns-7c65d6cfc9-8qn75                            1/1     Running   0          11m
kube-system      etcd-controlplane01                                 1/1     Running   0          11m
kube-system      kube-apiserver-controlplane01                       1/1     Running   0          11m
kube-system      kube-controller-manager-controlplane01              1/1     Running   0          11m
kube-system      kube-proxy-2dr96                                    1/1     Running   0          10m
kube-system      kube-proxy-2s96q                                    1/1     Running   0          10m
kube-system      kube-proxy-kwn5v                                    1/1     Running   0          10m
kube-system      kube-proxy-qlfb2                                    1/1     Running   0          11m
kube-system      kube-scheduler-controlplane01                       1/1     Running   0          11m
metallb-system   metallb-controller-6c9b5955bc-vnrcb                 1/1     Running   0          3m24s
metallb-system   metallb-speaker-22lwz                               4/4     Running   0          2m56s
metallb-system   metallb-speaker-4jgnf                               4/4     Running   0          2m58s
metallb-system   metallb-speaker-6cm5m                               4/4     Running   0          2m55s
metallb-system   metallb-speaker-fxq4t                               4/4     Running   0          2m58s
```
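The MetalLB controller and speakers are running, but MetalLB won't assign any LoadBalancer addresses until it has an address pool. Here is a minimal layer 2 sketch, assuming the `IPAddressPool` and `L2Advertisement` resources (`metallb.io/v1beta1`) that MetalLB 0.14 uses. The function, the resource names and the address range are all placeholders, so pick a range on the node subnet that nothing else (DHCP included) hands out:

```bash
# Hypothetical helper: print a MetalLB layer-2 config for the given address range.
# "homelab-pool" and "homelab-l2" are placeholder names.
metallb_l2_manifest() {
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: homelab-pool
  namespace: metallb-system
spec:
  addresses:
    - $1
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: homelab-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - homelab-pool
EOF
}

# Usage (placeholder range):
# metallb_l2_manifest 192.168.50.200-192.168.50.220 | kubectl apply -f -
```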

Notes

- Always check container runtime config first

- Debugging:
  - Network connectivity
  - Container runtime
  - kubelet logs
  - Pod logs

- Keep join tokens somewhere safe

- Screenshot working configs (trust me)
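For the first note, here is a tiny check that a node's containerd config actually ended up with the systemd cgroup driver that kubeadm.sh switches on with `sed`. A cgroup driver mismatch between the kubelet and containerd is a common cause of control-plane pods restarting. The `has_systemd_cgroup` name is made up; the default path is the one the script writes:

```bash
# Hypothetical check: succeed only if the containerd config has an uncommented
# "SystemdCgroup = true" line (the setting kubeadm.sh flips with sed).
has_systemd_cgroup() {
  config=${1:-/etc/containerd/config.toml}
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$config"
}

# Usage on a node:
# has_systemd_cgroup || echo "containerd is not using the systemd cgroup driver"
```

This only inspects the file; containerd needs a restart (as the script does) before a changed setting takes effect.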

Up Next

- Planning HA control plane

- Need to figure out:
  - Load balancer for the API server
  - Backup strategy (probably should have thought of this earlier)

Note to readers: This is not a tutorial. Your mileage may vary, and that’s the fun part!